Survey Classification

Data Generation Process Workshop

Yue Hu

Tsinghua University

Goal

What to Achieve

- Filter relevant questions from the survey pool

- Classify the questions into themes

- Verify assigned themes

Question Filter

Survey Selection

Download the pool of surveys from the

dcpotoolswebsiteHave a look at the downloaded spreadsheet

- Select

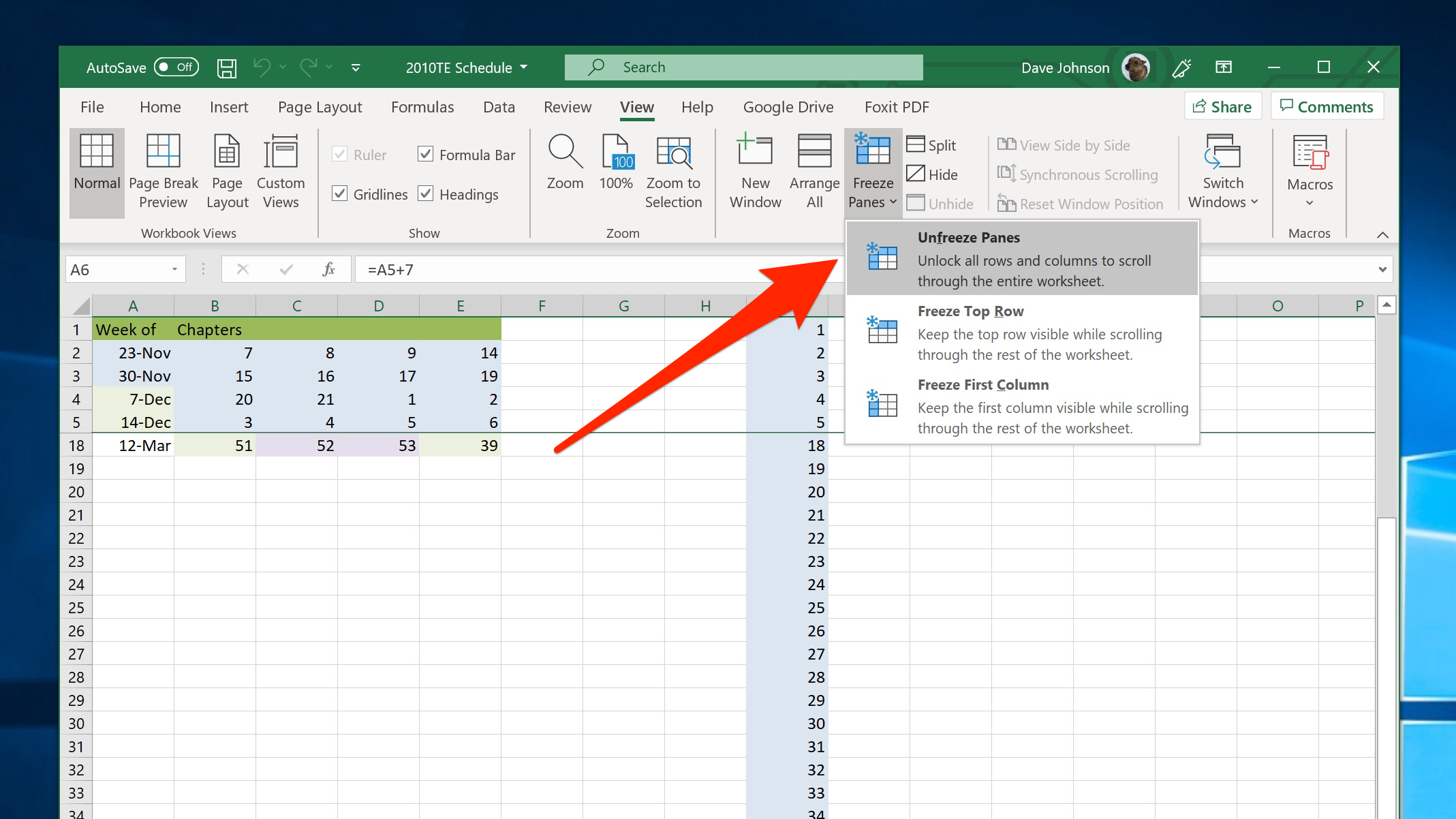

Freeze PanesfromWindow![]()

- Start from the cross-national surveys (

country_varis empty)

- Select
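A minimal sketch of the cross-national filter, assuming the spreadsheet has been exported to CSV with a country_var column. The helper name cross_national and the sample rows are hypothetical. An empty or whitespace-only country_var cell is treated as "empty".

```python
import csv
import io


def cross_national(rows):
    """Keep only rows whose country_var cell is empty (cross-national surveys)."""
    return [r for r in rows if not (r.get("country_var") or "").strip()]


# Hypothetical sample standing in for the exported surveys spreadsheet.
sample = io.StringIO(
    "survey,archive,country_var\n"
    "survey_a,gesis,\n"
    "survey_b,icpsr,country\n"
    "survey_c,dataverse,   \n"
)
rows = list(csv.DictReader(sample))
for row in cross_national(rows):
    print(row["survey"], row["archive"])
```

With a real export, replace the StringIO sample with `open("surveys_data.csv", newline="", encoding="utf-8")`, where the file name is an assumption.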

Survey Archiving

- Start from Column

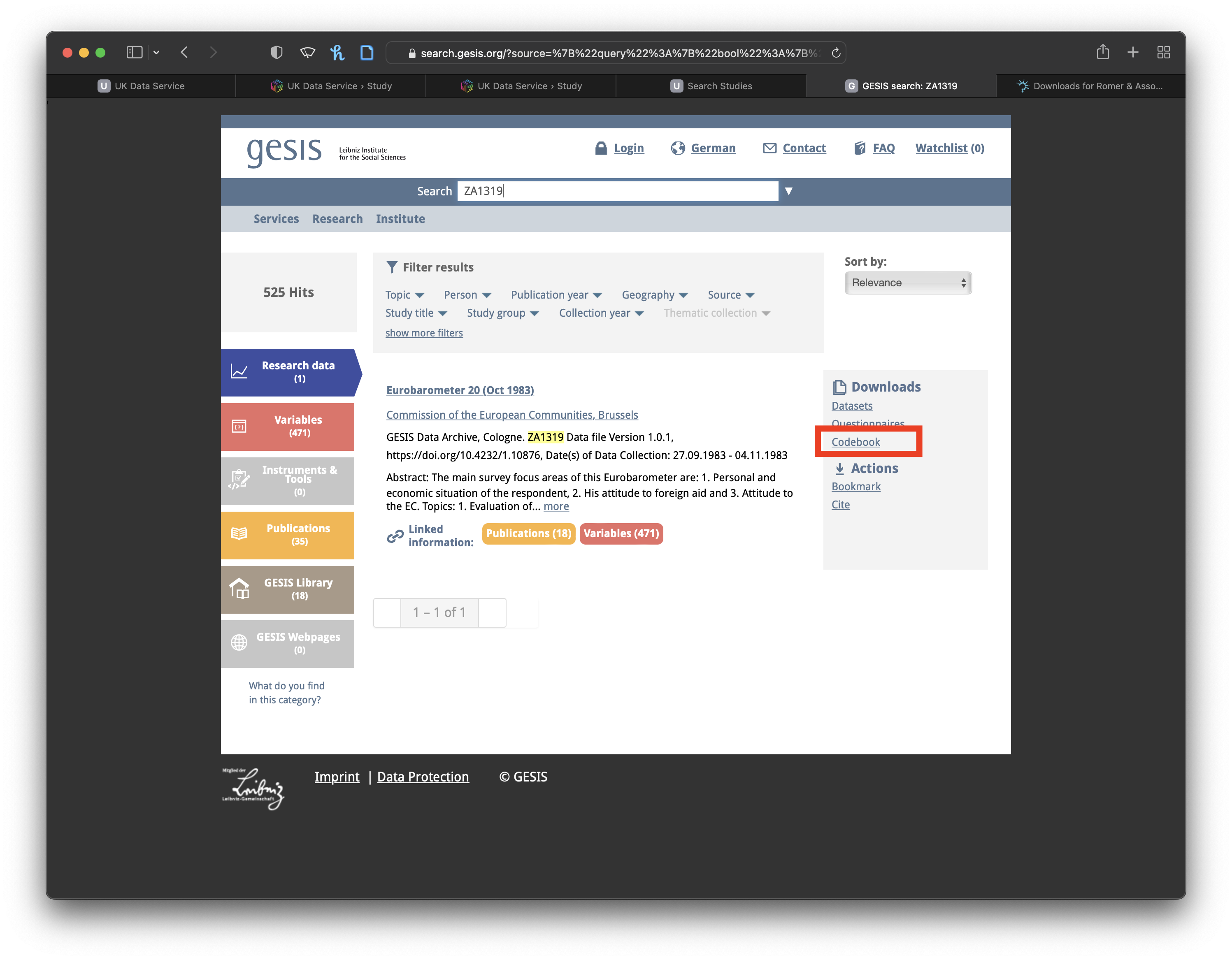

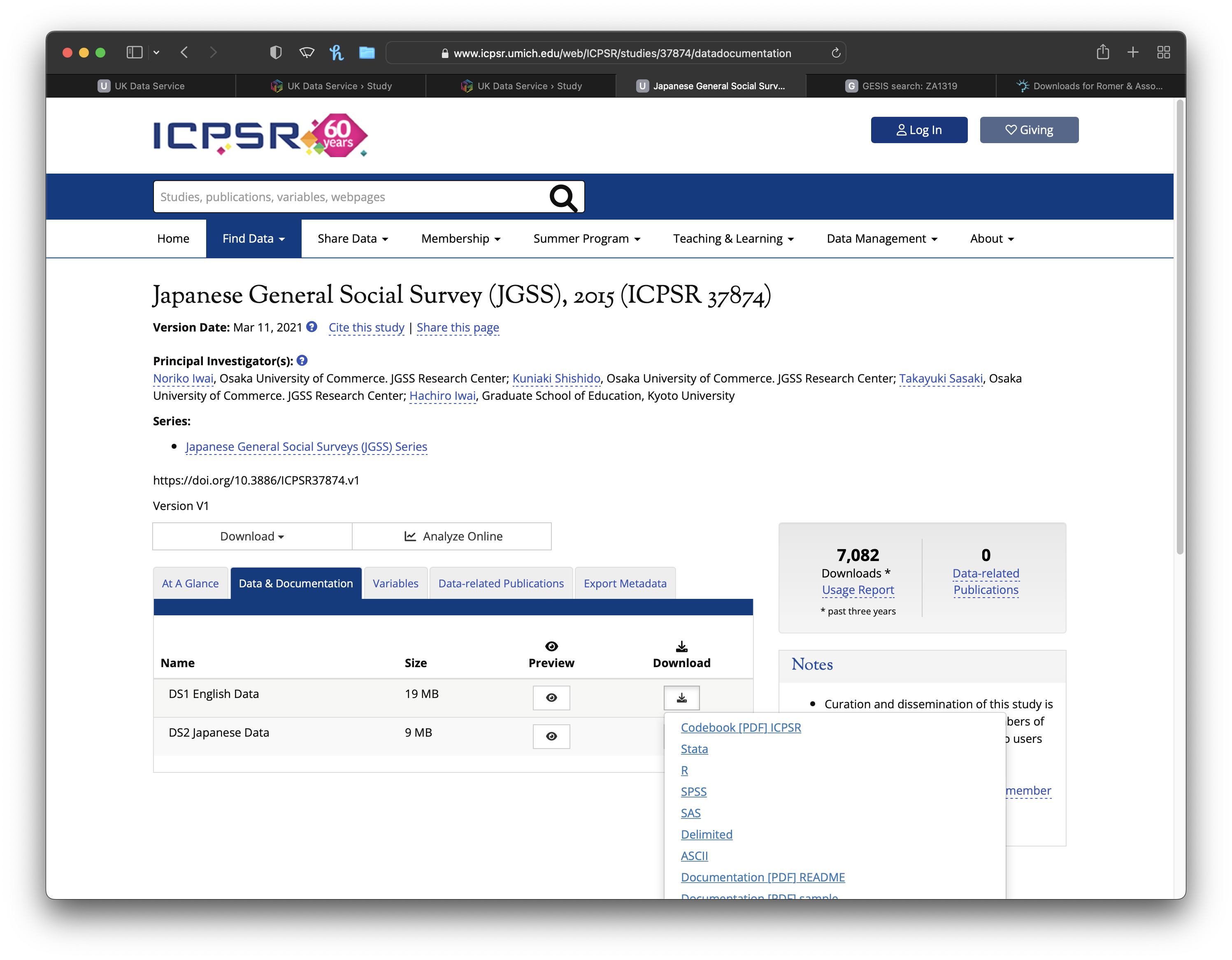

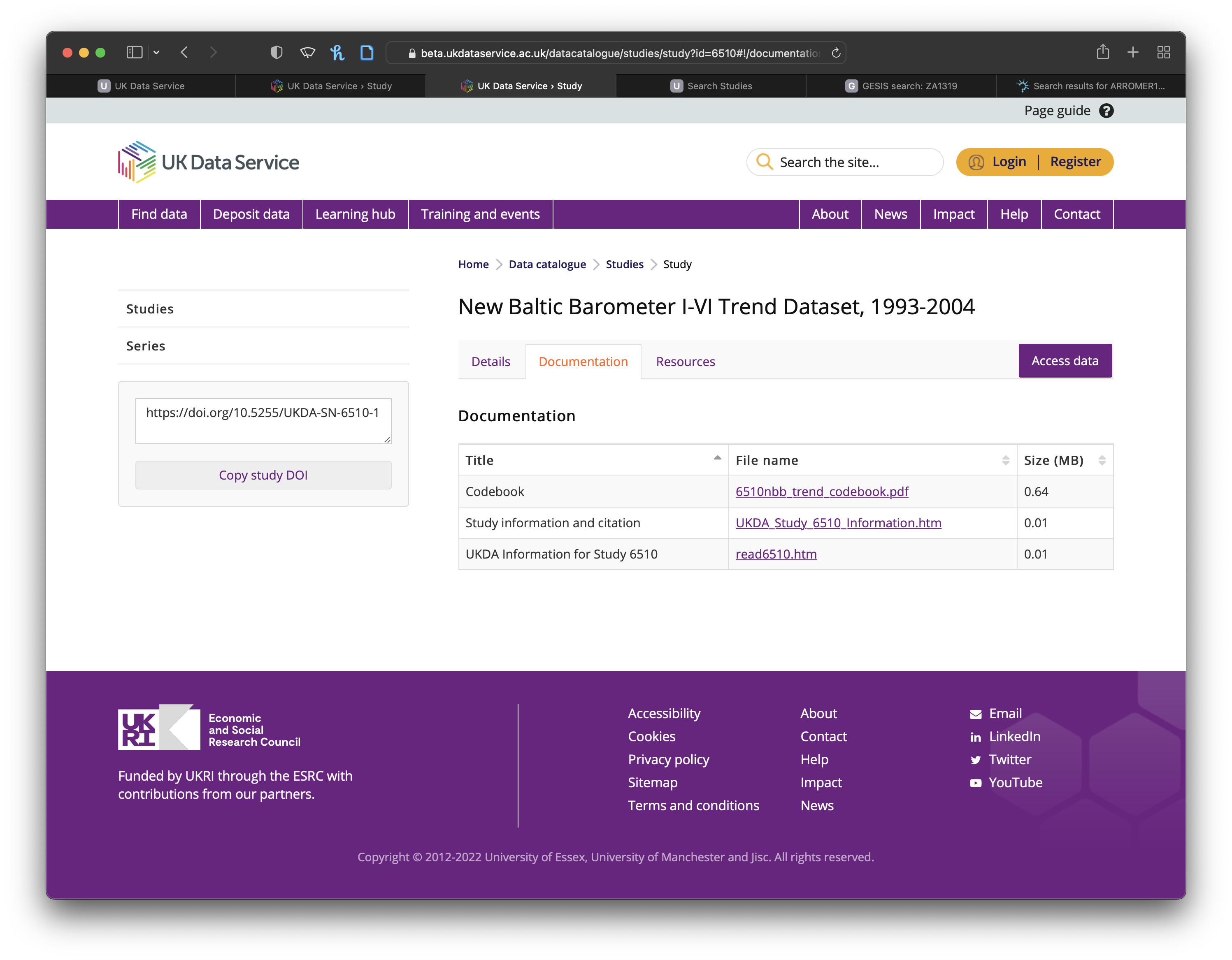

archive- First on

dataverse,gesis,icpsr,roper, orukdslabels - If

dataverse, use thedata_linkto achieve the questionnaire - If others, go to the survey website

- Search the

file_idin the search box- If nothing comes back, try the numeric part only

- More clicks may be needed

e.g., clicking “Studies” (icpsr) or “Studies/Datasets” (roper)

- Search the

- First on
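The archive rules above can be written down as a small lookup plan. This is a sketch, not part of DCPOtools: the function name lookup_plan, the "later" bucket for archives outside the first batch, and the example file IDs are all hypothetical. The numeric part is obtained by dropping every non-digit character.

```python
import re

# Archives the slides say to work on first.
PRIORITY_ARCHIVES = {"dataverse", "gesis", "icpsr", "roper", "ukds"}


def lookup_plan(archive, file_id, data_link=""):
    """Return (action, targets) for locating a survey's questionnaire.

    dataverse: follow the data_link directly.
    Other priority archives: search the archive website for the full
    file_id first, then for its numeric part if nothing comes back.
    Anything else is left for a later pass (an assumption).
    """
    archive = archive.strip().lower()
    if archive == "dataverse":
        return "open", [data_link]
    if archive in PRIORITY_ARCHIVES:
        terms = [file_id]
        numeric = re.sub(r"\D", "", file_id)  # keep digits only
        if numeric and numeric != file_id:
            terms.append(numeric)
        return "search", terms
    return "later", []


print(lookup_plan("gesis", "ZA1234"))  # hypothetical file_id
```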

Codebook Downloading

Question Selection

- Search keywords relating to your topic

  - e.g., for gender egalitarianism, you can search for “wom,” “husband,” “wife,” etc.

- Look at the questions around the ones you found

- Look over the index of questions
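If a questionnaire is available as text, the keyword search and the "look around" step can be combined. This is a sketch under the assumption that the questions have been pasted into a list of strings. The function name keyword_hits, the window parameter, and the sample questions are hypothetical. Matching is a plain case-insensitive substring test, so "wom" also catches "woman" and "women"; every hit still needs a human read.

```python
def keyword_hits(questions, keywords, window=1):
    """Return indices of questions matching any keyword, plus their neighbors.

    `window` adds that many questions before and after each hit,
    so related items next to a match are not missed.
    """
    lowered = [k.lower() for k in keywords]
    hits = {
        i for i, q in enumerate(questions)
        if any(k in q.lower() for k in lowered)
    }
    selected = set()
    for i in hits:
        for j in range(i - window, i + window + 1):
            if 0 <= j < len(questions):
                selected.add(j)
    return sorted(selected)


# Hypothetical questionnaire excerpt.
questions = [
    "q1. How old are you?",
    "q2. Should a wife earn as much as her husband?",
    "q3. Do you agree that women make good political leaders?",
    "q4. How often do you attend religious services?",
    "q5. What is your highest level of education?",
]
for i in keyword_hits(questions, ["wom", "husband", "wife"]):
    print(questions[i])
```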

Question Recording

Download the template from the DCPOtools website.

Fill in the columns as follows:

- survey: the survey, as named in the surveys_data spreadsheet

- variable: the question index, e.g., “q56,” “v122”

- question_text: the complete sentences read to the people taking the survey, or as close to that as you can find

- response_categories: the number and the label of each option, e.g., “1. Strongly agree, 2. Agree, 3. Neither agree nor disagree, 4. Disagree, 5. Strongly disagree”

If you’re sure there are no relevant questions in a survey, enter the survey, put “NA” under variable, and move on.

You may want to go over one archive at a time.

If anything seems important but doesn’t fit into these columns, put it in the note column.
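A sketch of one recorded row, assuming the template's columns are survey, variable, question_text, response_categories, and note in that order (check this against the actual DCPOtools template). The helpers record and parse_categories and the sample rows are hypothetical. The check enforces the “1. label, 2. label” pattern from the example above. It skips rows where variable is “NA”, and it may be too strict for questions that use a different response format.

```python
import csv
import io
import re

# Column order is an assumption; match the actual template.
FIELDS = ["survey", "variable", "question_text", "response_categories", "note"]

# Matches "1. Strongly agree, 2. Agree, ..." style category strings.
CATEGORY = re.compile(r"(\d+)\.\s*(.+?)(?=,\s*\d+\.|$)")


def parse_categories(text):
    """Split a response_categories string into (number, label) pairs."""
    return [(int(n), label.strip()) for n, label in CATEGORY.findall(text)]


def record(survey, variable, question_text="", response_categories="", note=""):
    """Build one row; use variable="NA" for surveys with no relevant questions."""
    if variable != "NA" and not parse_categories(response_categories):
        raise ValueError(f"{survey}/{variable}: categories not in '1. label, 2. label' form")
    return dict(zip(FIELDS, [survey, variable, question_text, response_categories, note]))


rows = [
    record(
        "survey_a", "q56",
        "A husband's job is to earn money; a wife's job is to look after the home and family.",
        "1. Strongly agree, 2. Agree, 3. Neither agree nor disagree, "
        "4. Disagree, 5. Strongly disagree",
    ),
    record("survey_b", "NA"),  # no relevant questions in this survey
]
out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(rows)
print(out.getvalue())
```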

Question Clustering

Classification

Three people per group, one group per topic.

- Immigration

- Inequality

- LGBTQIA+

- Read through the questions (each of you covers at least one third of an archive)

- Categorize them into three topics

- Use a term to represent each topic

- Mark the topic for each question

- Talk with your partners to justify or modify your categorization systems until you agree on a consistent one

- Mark all the questions

- Measure the intercoder reliability (ICR) with Fleiss’ κ to decide whether you need to categorize the questions again
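Fleiss’ κ compares the observed agreement among a fixed number of coders with the agreement expected by chance. For N questions, n coders, and n_ij coders assigning question i to category j: p_j = Σ_i n_ij / (N·n), P_i = (Σ_j n_ij² − n) / (n(n − 1)), P̄ is the mean of P_i, P_e = Σ_j p_j², and κ = (P̄ − P_e) / (1 − P_e). Below is a minimal sketch. Each inner list holds the three coders’ labels for one question. The function name and the theme labels are hypothetical. statsmodels also offers fleiss_kappa in statsmodels.stats.inter_rater if you prefer a library.

```python
from collections import Counter


def fleiss_kappa(labels):
    """Fleiss' kappa for a list of questions, each a list of labels from n coders.

    Every question must be rated by the same number of coders (n >= 2).
    """
    n = len(labels[0])
    if n < 2 or any(len(item) != n for item in labels):
        raise ValueError("each question needs the same number (>= 2) of ratings")
    N = len(labels)
    counts = [Counter(item) for item in labels]
    categories = set().union(*counts)
    # Share of all ratings that fall in each category (p_j).
    p = {c: sum(cnt[c] for cnt in counts) / (N * n) for c in categories}
    # Observed agreement per question (P_i), then its mean (P-bar).
    P_i = [(sum(v * v for v in cnt.values()) - n) / (n * (n - 1)) for cnt in counts]
    P_bar = sum(P_i) / N
    # Agreement expected by chance (P_e).
    P_e = sum(v * v for v in p.values())
    if P_e == 1:
        raise ValueError("kappa is undefined when every rating uses one category")
    return (P_bar - P_e) / (1 - P_e)


# Hypothetical labels: one row per question, one entry per coder.
codes = [
    ["economy", "economy", "economy"],
    ["culture", "culture", "culture"],
    ["economy", "economy", "culture"],
    ["economy", "culture", "culture"],
]
print(round(fleiss_kappa(codes), 3))  # 0.333
```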

Tips

Make sure to record the full sentence of each question.

Following Landis & Koch (1977), let’s aim for a κ of 0.8.
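For reading a computed κ, the Landis & Koch (1977) benchmarks are: below 0 poor, 0.00–0.20 slight, 0.21–0.40 fair, 0.41–0.60 moderate, 0.61–0.80 substantial, and 0.81–1.00 almost perfect. Here is a small sketch that maps a value to its label and checks it against the 0.8 target. The function names are hypothetical. Note that κ = 0.8 itself falls at the top of the “substantial” band.

```python
def landis_koch(kappa):
    """Map a kappa value to its Landis & Koch (1977) benchmark label."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


def meets_target(kappa, target=0.8):
    """True when kappa reaches the workshop's target."""
    return kappa >= target


for k in (0.33, 0.8, 0.85):
    print(k, landis_koch(k), meets_target(k))
```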

A high κ is not the ultimate goal; in other words, no! fake! consistency!

Communicate with your partners twice:

- After you are familiar with the data, to nail down the categorization system

- After calculating the ICR, to figure out the problem if κ is low

Make sure everyone records the data in the same way.
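Inconsistent recording can also deflate κ. For example, “Economy” and “economy ” would count as two different categories. One possible safeguard, assuming each coder types topic labels by hand, is to normalize the labels and flag any that fall outside the agreed categorization system before computing agreement. The helper names and sample labels are hypothetical.

```python
import re


def normalize(label):
    """Lowercase, trim, and collapse internal whitespace in a recorded label."""
    return re.sub(r"\s+", " ", label).strip().lower()


def off_codebook(labels, agreed):
    """Return recorded labels that don't match the agreed set after normalizing."""
    agreed_norm = {normalize(a) for a in agreed}
    return sorted({lab for lab in labels if normalize(lab) not in agreed_norm})


agreed = ["Economy", "Culture"]  # hypothetical agreed topic terms
recorded = ["economy", "Culture ", "econ", "culture"]
print(off_codebook(recorded, agreed))
```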